Migrating to Hetzner with Coolify

What I did

A few weeks ago, I got to watch Jeff Triplett migrate DjangoPackages from DigitalOcean to Hetzner[1] using Coolify. The magical world of Coolify made everything look just so ... easy. Jeff mentioned that one of the driving forces behind the decision to go to Hetzner was price: Hetzner is cheaper, with the same quality.

Aside

To give some perspective, the table below compares what I had, and what I paid (or now pay), at each VPS provider.

| Server Spec | Digital Ocean Cost (per month) | Hetzner Cost (per month) | Count |
|---|---|---|---|
| Managed Database with 1GB RAM, 1vCPU | $15.15 | NA | 2 |
| s-1vcpu-2gb | $12 | NA | 1 |
| s-1vcpu-1gb | $6 | NA | 3 |
| cpx11 | NA | $4.99 | 2 |
| cpx21 | NA | $9.99 | 2 |
| cpx31 | NA | $17.99 | 1 |

With Digital Ocean I was paying about $72.50 per month for my servers. This got me 2 Managed Databases (@$15 each) and 4 Ubuntu servers (1 s-1vcpu-2gb and 3 s-1vcpu-1gb).

Based on my maths for January, my Hetzner bill should be about $61. The only downside is that I have to 'manage' my databases myself ... however, with Digital Ocean I always felt like I was playing with house money because I didn't pay for backups. Now, with Hetzner, I have backups saved to an S3 bucket (and Coolify has amazing docs on how to set this up!)
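To sanity-check the comparison, each provider's monthly cost is just price times count, summed over its rows. The minimal bash sketch below does that for the rows in the table, storing prices in cents so bash's integer arithmetic stays exact; the short provider and spec labels are made up for the sketch. Summing only those rows gives $60.30 for Digital Ocean and $47.95 for Hetzner, which is less than the monthly figures quoted above, so presumably the real bills include charges the table doesn't list.

```bash
#!/bin/bash
# Minimal sketch: total the monthly cost per provider from the comparison table.
# Prices are stored in cents so bash integer arithmetic stays exact.

# Each row: provider  spec  monthly_price_cents  count (labels are illustrative)
rows=(
  "do managed-db-1gb-1vcpu 1515 2"
  "do s-1vcpu-2gb 1200 1"
  "do s-1vcpu-1gb 600 3"
  "hetzner cpx11 499 2"
  "hetzner cpx21 999 2"
  "hetzner cpx31 1799 1"
)

# Print the monthly total for one provider in dollars, e.g. "60.30".
provider_total() {
  local provider="$1" total=0 row p spec cents count
  for row in "${rows[@]}"; do
    read -r p spec cents count <<< "$row"
    if [ "$p" = "$provider" ]; then
      total=$(( total + cents * count ))
    fi
  done
  printf '%d.%02d\n' $(( total / 100 )) $(( total % 100 ))
}

echo "Digital Ocean: \$$(provider_total do)"
echo "Hetzner:       \$$(provider_total hetzner)"
```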

End Aside

Original State

I had 6 servers on Digital Ocean:

- 3 production web servers

- 1 test web server

- 1 managed database production server

- 1 managed database test server

This cost me roughly $75 per month.

Current State

- 2 production web servers

- 1 test web server

- 1 production database server

- 1 test database server

This cost me roughly $63 per month.

Setting Up Hetzner

To get this all started, I needed to create a Hetzner account[2]. Once I did that, I created my first server, a CPX11, so that I could install Coolify.

Next, I needed to clean up my DNS records. Over the years I had DNS managed both at my registrar of choice (hover.com) and within Digital Ocean. Hetzner offers a DNS service, so I decided to move everything there. Once all of my DNS was there, I added a record for my Coolify instance and proceeded with the initial setup.

In all, I migrated 9 sites. They can be roughly broken down like this:

Coolify: setting up projects, environments, resources

Coolify has several concepts (projects, environments, and resources) that took a second to click for me.

It took me some time reading through the docs, but once it clicked I ended up organizing my projects in a way that made sense to me. I also ended up creating 2 environments for each project:

- Production

- UAT

Starting with Nixpacks

Initially I thought that Coolify would only support Docker or docker-compose files, but there are also options for static sites and Nixpacks. It turns out that Nixpacks was exactly what I needed to get started.

NB: There is a note on the Nixpacks site that states:

"⚠️ Maintenance Mode: This project is currently in maintenance mode and is not under active development. We recommend using Railpack as a replacement."

However, Railpack isn't something that Coolify offered, so 🤷🏻♂️

I have a silly Django app called DoesTatisJrHaveAnErrorToday.com[3] that seemed like the lowest-risk site to start this experiment with.

Outline of Migration steps

- Allow access to the database server from the associated Hetzner server, i.e., the Production Hetzner web server needs access to the Production Digital Ocean managed database server, and the UAT Hetzner web server needs access to the UAT Digital Ocean managed database server

- Add a DNS record for hetzner.domain.tld, for example, hetzner.uat.doestatisjrhaveanerrortoday.com

- Set up the site in Coolify in my chosen Project, Environment, and Resource. For me this was Tatis, UAT, "Does Tatis Jr Have An Error UAT"

- Configure the General tab in Coolify. For me this meant just adding an entry to 'Domains' with `https://hetzner.uat.doestatisjrhaveanerrortoday.com`

- Configure environment variables[4][5]

- Hit Deploy

- Verify everything works

- Update the `General > Domains` entry to have `https://hetzner.uat.doestatisjrhaveanerrortoday.com` and `https://uat.doestatisjrhaveanerrortoday.com`

- Update DNS to have UAT point to the Hetzner server

- Deploy again

- Wait ... for DNS propagation

- Verify `https://uat.doestatisjrhaveanerrortoday.com` works

- Remove `https://hetzner.uat.doestatisjrhaveanerrortoday.com` from DNS and Coolify

- Deploy again

- Verify `https://uat.doestatisjrhaveanerrortoday.com` still works

- Remove the GitHub Action I had to deploy to my Digital Ocean UAT server

- Repeat for Production, replacing `https://hetzner.uat.doestatisjrhaveanerrortoday.com` with `https://hetzner.doestatisjrhaveanerrortoday.com`

I then repeated the process for each of my other Django sites (all 4 of them).
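The "Wait ... for DNS propagation" step can be checked from a terminal instead of by refreshing a browser. Below is a minimal bash sketch, assuming `dig` is installed; `wait_for_dns` is a hypothetical helper, and the host name and IP in the usage comment are placeholders (203.0.113.10 is a documentation address, not a real server).

```bash
#!/bin/bash
# Poll DNS until a hostname's A record points at the expected server IP.
# Usage (placeholder values): wait_for_dns uat.example.com 203.0.113.10 [interval_seconds]
wait_for_dns() {
  local host="$1" expected_ip="$2" interval="${3:-60}"
  # dig +short prints only the answer records, one per line;
  # grep -x -F matches a whole line as a fixed string.
  until dig +short A "$host" | grep -qxF "$expected_ip"; do
    echo "$(date '+%H:%M:%S') $host does not resolve to $expected_ip yet, retrying in ${interval}s"
    sleep "$interval"
  done
  echo "$host resolves to $expected_ip"
}
```

Your local resolver may keep serving a cached answer for a while; asking a public resolver directly (for example `dig @1.1.1.1 +short A uat.example.com`) is one way to see what the rest of the internet sees.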

Switching to Dockerfile/docker-compose.yaml

This worked great, but:

- The highest version of Python that Nixpacks supports is Python 3.13

- The warning message I mentioned above about Nixpacks being in "Maintenance Mode"

Also, I had a Datasette / Django app combination that I wanted to deploy, but couldn't figure out how to do it with Nixpacks. While the Django app isn't where I want it to be, and I'm pretty sure there's a Datasette plugin that would do most of what the Django app does, I liked the way it was set up and wanted to keep it!

Writing the Dockerfile and docker-compose.yaml files

I used Claude to help get me started on my Dockerfile and docker-compose.yaml files. This made migrating off of Nixpacks a bit easier than I thought it would be.

I was able to get all of my Django and Datasette apps onto a Dockerfile configuration, but one of my sites, which scrapes game data from TheAHL.com and has an accompanying Django app, required a docker-compose.yaml file to get set up[6].

One gotcha I discovered was that the Coolify UI seems to indicate that you can give your docker-compose file any name, but in my experience it expected the file to be called docker-compose.yaml, not docker-compose.yml, which led to a bit more troubleshooting than I would have liked!
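If you run into the same naming issue, a small pre-deploy check can rename the file before Coolify ever sees it. A minimal bash sketch; `ensure_compose_yaml` is a hypothetical helper name, and in a git repo you would still commit the rename (or use `git mv`) so the deployed branch has the `.yaml` file.

```bash
#!/bin/bash
# Rename docker-compose.yml to docker-compose.yaml so the file matches the
# name Coolify appeared to expect. Does nothing if the .yaml already exists.
# Usage: ensure_compose_yaml [project_dir]
ensure_compose_yaml() {
  local dir="${1:-.}"
  if [ -f "$dir/docker-compose.yml" ] && [ ! -f "$dir/docker-compose.yaml" ]; then
    mv "$dir/docker-compose.yml" "$dir/docker-compose.yaml"
    echo "Renamed $dir/docker-compose.yml to docker-compose.yaml"
  fi
}
```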

Upgrading all the things

OK, now with everything running in Docker, I set about upgrading all of my apps to Python 3.14. This proved to be relatively easy for the Django apps, and a bit more complicated for the Datasette apps, but only because of a decision I had made at some point to pin to a 1.0 alpha version of Datasette. Once I discovered and resolved the underlying issue, the upgrade was, again, a walk in the park.

Once I was on Python 3.14, it was another relatively straightforward task to upgrade all of my apps to Django 6.0. Honestly, Docker just feels like magic compared to what I was doing before, and to how worried I used to get when trying to upgrade my Python or Django versions.
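After upgrades like these, a quick way to confirm what a container is actually running is to ask Python and Django directly. A minimal bash sketch, assuming the app image has `python` on its PATH with Django installed; `app_versions` is a hypothetical helper, not something Coolify provides.

```bash
#!/bin/bash
# Print the Python and Django versions running inside an app container.
# Usage: app_versions <container_name_or_id>
app_versions() {
  local container="$1"
  docker exec "$container" python -c \
    'import sys, django; print(f"Python {sys.version.split()[0]}, Django {django.get_version()}")'
}
```

To check every running container at once, a loop such as `for c in $(docker ps --format '{{.Names}}'); do echo "$c: $(app_versions "$c")"; done` would work, though containers without Django will report an import error instead of versions.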

Migrating database servers

With all of my web servers wound down, the only thing left was my managed database servers. To get the new ones set up, I installed pgAdmin (with the help of Coolify) so that I didn't have to drop into psql in the terminal on the servers I was going to use as database servers.

Once that was done, I created backups of each Production and UAT database on my MacBook so that I could restore them to the new Hetzner servers. To get each backup I ran this:

```bash
docker run --rm postgres:17 pg_dump "postgresql://doadmin:password@host:port/database?sslmode=require" -F c --no-owner --no-privileges > database.dump
```

I did this for each of my 4 databases. Why did I use the docker run --rm postgres:17 pg_dump instead of just pg_dump? Because my MacBook had Postgres 16 while the server was on Postgres 17 and this was easier than upgrading my local Postgres instance.
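Restoring a custom-format (`-F c`) dump is the job of `pg_restore`, and the same Docker trick avoids any local version mismatch. A minimal bash sketch, assuming the target database already exists on the Hetzner server; `restore_dump` and the connection string in the usage comment are placeholders.

```bash
#!/bin/bash
# Restore a custom-format dump (made with pg_dump -F c) into an existing database.
# Usage (placeholder URL):
#   restore_dump database.dump "postgresql://postgres:password@db-host:5432/tatis"
restore_dump() {
  local dump_file="$1" target_url="$2"
  # -i keeps stdin open so pg_restore inside the container can read the dump;
  # with no file argument, pg_restore reads the archive from standard input.
  # -d accepts a full connection string as well as a plain database name.
  docker run --rm -i postgres:17 \
    pg_restore --no-owner --no-privileges -d "$target_url" < "$dump_file"
}
```

`--no-owner` and `--no-privileges` mirror the flags used for the dump, so objects end up owned by whichever role the connection string logs in as.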

Starting with UAT

I started with my test servers first so I could break things and have it not matter. I used my least risky site (tatis) first.

The steps I used were:

- Create database on Hetzner UAT database server

- Restore from UAT on Digital Ocean

- Repeat for each database

- Open up access to Hetzner database server for Hetzner UAT web server

- Change the `DATABASE_URL` connection string for tatis in my environment variables to point to the Hetzner server

- Deploy UAT site

- Verify change works

- Drop database from UAT Digital Ocean database server

- Verify site still runs

- Repeat for each Django app on UAT

- Allow access to Digital Ocean server from only my IP address

- Verify everything still works

- Destroy UAT managed database server

- Repeat for prod

For the 8 sites / databases this took about 1 hour, which, given how much needed to be done, was a pretty quick turnaround. That being said, I probably spent 2.5 hours planning it out to make sure that I had everything set up and didn't break anything, even on my test servers.
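Two of the steps above, creating the database and confirming a web server can reach the new database server before switching `DATABASE_URL`, are easy to script. A minimal bash sketch: the function names are hypothetical, `container_id` is the Postgres container on the new server (as in the copy script further down), and running psql through the `postgres:17` image mirrors the `pg_dump` approach used earlier.

```bash
#!/bin/bash
# Create an empty database to restore into.
# Usage: create_database <postgres_container_id> <db_name>
create_database() {
  local container_id="$1" db_name="$2"
  docker exec "$container_id" psql -U postgres -v ON_ERROR_STOP=1 \
    -c "CREATE DATABASE \"$db_name\";"
}

# Check that a connection string works from this machine, e.g. from the web
# server after opening up firewall access, by running a trivial query.
# Usage: check_db_connection "postgresql://user:password@db-host:5432/tatis"
check_db_connection() {
  local url="$1"
  if docker run --rm postgres:17 psql "$url" -tAc 'SELECT 1' > /dev/null 2>&1; then
    echo "OK: connected"
  else
    echo "FAILED: could not connect" >&2
    return 1
  fi
}
```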

Backups

One thing about the Digital Ocean managed servers is that backups were an extra fee. I did not pay for the backups. This was a mistake ... I should have, and it always freaked me out that I didn't have them enabled. Even though these are essentially hobby projects, when you don't do the right thing you know it.

Now that I'm on non-managed servers I decided to fix that, and it turns out that Coolify has a really great tutorial on setting up an AWS S3 bucket for your database backups to be written to.

It was so easy I was able to set up the backups for each of my databases with no fuss.

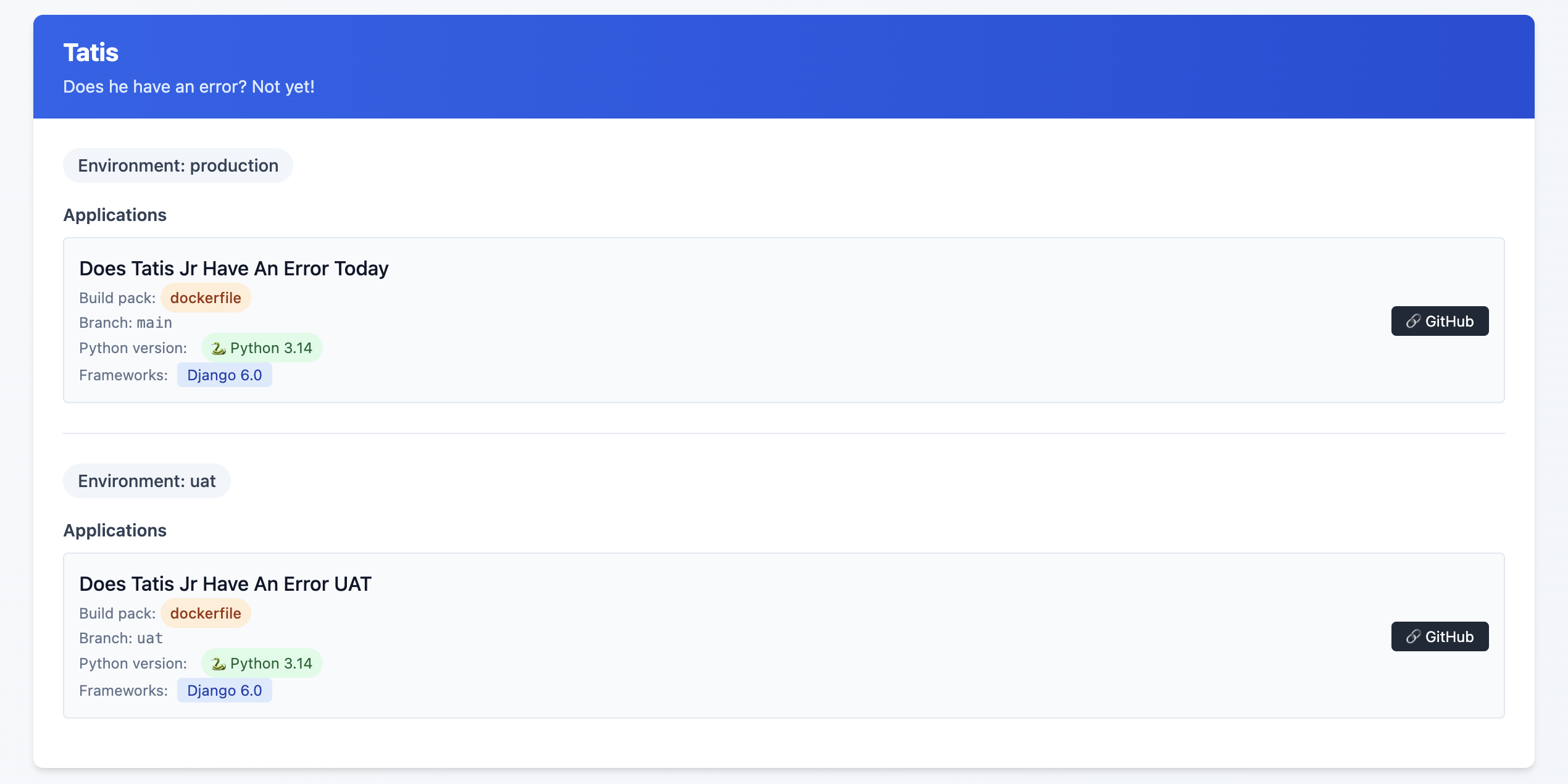
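Once backups are flowing, it is worth checking now and then that new ones keep arriving. A minimal bash sketch, assuming the AWS CLI is installed and configured; the bucket path is a placeholder, and no particular object layout from Coolify is assumed beyond backups living under one prefix.

```bash
#!/bin/bash
# Show the most recent object under an S3 prefix, as a quick check that
# scheduled backups are still arriving.
# Usage (placeholder path): latest_backup s3://my-backup-bucket/some/prefix/
latest_backup() {
  local s3_path="$1"
  # `aws s3 ls --recursive` prints "date time size key" for each object;
  # sorting those lines puts the newest timestamp last.
  aws s3 ls "$s3_path" --recursive | sort | tail -n 1
}
```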

Coolify dashboard thing

I mentioned above that I upgraded all of my apps to Python 3.14 and Django 6.0. For all of the great things about Coolify, trying to find this information at a high level is a pain in the ass. Luckily they have a fairly robust API that allowed me to vibe code a script that outputs an HTML file showing everything I needed to know about my Applications with respect to Python, Django, and Datasette versions. It also gave me an overview of my database backup setups[7]!

This is an example of the final state, but what I saw at first was some sites on Django 4.2, others on Python 3.10, and ... yeah, it was a mess!

I might release this as a package or something at some point, but I'm not sure that anyone other than me would want to use it so, 🤷♂️
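For anyone curious what talking to the API looks like, here is a minimal bash sketch (not the script behind the dashboard above). It assumes Coolify's `/api/v1/applications` endpoint with a Bearer token, plus `curl` and `jq`; the instance URL is a placeholder, and the JSON field names (`.name`, `.build_pack`, `.fqdn`) are assumptions worth checking against your instance's API docs.

```bash
#!/bin/bash
# Hypothetical value: point this at your own Coolify instance.
COOLIFY_URL="${COOLIFY_URL:-https://coolify.example.com}"

# Fetch all applications from the Coolify API as JSON.
# Requires COOLIFY_TOKEN to hold an API token created in Coolify.
coolify_applications() {
  curl -fsS \
    -H "Authorization: Bearer ${COOLIFY_TOKEN:?set COOLIFY_TOKEN first}" \
    -H "Accept: application/json" \
    "${COOLIFY_URL}/api/v1/applications"
}

# Print one tab-separated line per application: name, build pack, domains.
# The field names are assumptions about the response shape.
summarize_applications() {
  coolify_applications | jq -r '.[] | [.name, .build_pack, .fqdn] | @tsv'
}
```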

What this allows me to do now

One of the great features of Coolify is Preview Deployments, which I've been able to implement relatively easily[8]. They let me be pretty confident that what I've done will work out OK. Even with a UAT server, sometimes just having that extra bit of security feels ... nice.

One thing I did (because I could, not because I needed to!) was to have a PR-specific database on my UAT database server. Each database is called {project}_pr and is a full copy of that project's UAT database. I have a cron job set up that restores these databases each night.

I used Claude to help generate the shell script below:

```bash
#!/bin/bash

# Usage: ./copy_db.sh source_db container_id [target_db]
# If target_db is not provided, it will be source_db_pr

source_db="$1"
container_id="$2"
target_db="$3"

# Validate required parameters
if [ -z "$source_db" ]; then
  echo "Error: Source database name required"
  echo "Usage: $0 source_db container_id [target_db]"
  exit 1
fi

if [ -z "$container_id" ]; then
  echo "Error: Container ID required"
  echo "Usage: $0 source_db container_id [target_db]"
  exit 1
fi

# Set default target_db if not provided
if [ -z "$target_db" ]; then
  target_db="${source_db}_pr"
fi

# Dynamic log filename
log_file="${source_db}_copy.log"

# Function to log with timestamp
log_message() {
  echo "$(date '+%Y-%m-%d %H:%M:%S') - $*" | tee -a "$log_file"
}

log_message "Starting database copy: $source_db -> $target_db"
log_message "Container: $container_id"

# Drop existing target database
log_message "Dropping database $target_db if it exists..."
if docker exec "$container_id" psql -U postgres -c "DROP DATABASE IF EXISTS $target_db;" >> "$log_file" 2>&1; then
  log_message "✓ Successfully dropped $target_db"
else
  log_message "✗ Failed to drop $target_db"
  exit 1
fi

# Create new target database
log_message "Creating database $target_db..."
if docker exec "$container_id" psql -U postgres -c "CREATE DATABASE $target_db;" >> "$log_file" 2>&1; then
  log_message "✓ Successfully created $target_db"
else
  log_message "✗ Failed to create $target_db"
  exit 1
fi

# Copy database using pg_dump piped into psql.
# ON_ERROR_STOP=1 makes psql exit non-zero if a restored statement fails;
# without it, psql reports success even when statements error.
# Note: the pipe's exit status is psql's, so a failing pg_dump is not caught here.
log_message "Copying data from $source_db to $target_db..."
if docker exec "$container_id" sh -c "pg_dump -U postgres $source_db | psql -U postgres -v ON_ERROR_STOP=1 $target_db" >> "$log_file" 2>&1; then
  log_message "✓ Successfully copied database"
else
  log_message "✗ Failed to copy database"
  exit 1
fi

log_message "Database copy completed successfully"
log_message "Log file: $log_file"
```

Again, is this strictly necessary? Not really. Did I do it anyway just because? Yes!
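One way to drive copy_db.sh from the nightly cron job is a small wrapper that loops over the databases and is itself scheduled with cron. A minimal bash sketch; everything here (the wrapper's file name, paths, the 03:00 schedule, and the database list) is hypothetical.

```bash
#!/bin/bash
# refresh_pr_dbs.sh: hypothetical nightly wrapper around copy_db.sh.
# Example crontab entry (03:00 every night, server time):
#   0 3 * * * /home/deploy/refresh_pr_dbs.sh <container_id>

# Source databases to refresh; each one is copied to {name}_pr. Placeholder list.
databases=(tatis)

# Usage: refresh_pr_databases <container_id> [path_to_copy_db.sh]
refresh_pr_databases() {
  local container_id="$1" copy_script="${2:-./copy_db.sh}" failed=0 db
  for db in "${databases[@]}"; do
    if ! "$copy_script" "$db" "$container_id"; then
      echo "Refresh failed for $db" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Only run when given arguments, so the functions can be loaded without side effects.
if [ "$#" -gt 0 ]; then
  # cron starts jobs in the home directory, and copy_db.sh writes its log to
  # the current directory, so run from this script's own folder.
  cd "$(dirname "$0")" || exit 1
  refresh_pr_databases "$@"
fi
```

Carrying on past a failed database (instead of stopping) means one broken copy doesn't leave every other `_pr` database stale, while the non-zero exit status still flags the problem.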

Was it worth it?

Hell yes!

It took time. I'd estimate about 3 hours per Django site, and 1.5 hours per non-Django site. I'm happy with my backup strategy, and preview deployments are just so cool. I did this mostly over the Christmas / New Year holiday as I fought through a cold.

Another benefit of being on Coolify is that I'm able to run

What's next?

Getting everything set up to be mostly consistent is great, but there are still some unnecessary differences between the Dockerfiles that each Django site uses.

I also see the potential to have better alignment on the use of my third party packages. Sometimes I chose package X because that's what I knew about at the time, and then I discovered package Y but never went back and switched it out where I was using package X before.

Finally, I really want to figure out the HTTPS issue on some of my preview deployments.

1. This is an affiliate link

2. This is an affiliate link

3. More details on why I have this site here

4. Considerations for Nixpacks: the default version of Python for Nixpacks is 3.11. You can override this with the environment variable `NIXPACKS_PYTHON_VERSION`, up to Python 3.13.

5. Here, you need to make sure that your `ALLOWED_HOSTS` includes `hetzner.uat.doestatisjrhaveanerrortoday.com`

6. Do I need this set up? Probably not. I'm pretty sure there's a Datasette plugin that does allow for edits in the SQLite database, but this was more of a "Can I do this?" than an "I need to do this" kind of thing

7. There are a few missing endpoints, specifically when it comes to Service database details

8. One of my sites doesn't like serving up the deployment preview with SSL, but I'm working on that!

Django Simple Deploy and Digital Ocean

Today on Mastodon Eric Matthes posted about his library django-simple-deploy and a plugin that lets it deploy to Digital Ocean, and I am so pumped for this!

I said as much and Eric asked why.

My answer:

all of my Django apps are deployed to Digital Ocean. I have a “good enough” workflow for deployment, but every time I need to make a new server for a new project I’m mostly looking through a ton of stuff to try and figure out what I did last time. This mostly works in that after a few hours I have what I need, but having something simpler would be very nice … especially if/when I want to help someone with their own deployment to DO

I have wanted to help automate and/or simplify deployment to Digital Ocean more times than I can count. Something like this would have been extremely helpful when I moved off Heroku and onto Digital Ocean, as I had no idea how to do the server setup, or deployment, or anything remotely related.

A few years later I still don't feel 100% comfortable with it all of the time, and I'm a "web professional".

Eric's tool is going to make this so much easier and I'm so here for that!

Intro to Djangonaut Space

During my first session with Team Venus today I went through some orientation (for lack of a better term) items with my awesome new Djangonauts. I'm writing it down here because I'm sure I've gone through this same write-up before, but darned if I could find it!

There are several ways to contribute to open source, and not all of them are code. Some items I like to call out are:

- Code

- Docs

- Pull Request Review

Many people might think that the only way to contribute is via code, but the other aspects are also super important!

Things I like the Djangonauts (and myself) to keep in mind during the program:

- Contributions aren't a 'race'. Go at your own pace.

- This is a volunteer thing. Life will come up. That's OK. If you have to miss a meeting just let me know.

- Expect to spend about 1 hour per weekday (i.e., about 5 hours per week) working on your tickets.

- Fill out your workbook at the end of each week (Sunday); this helps me figure out what help you need at these meetings

- Post your wins in Discord!

- Keep in mind the Showcase at the end of the program where you'll have an opportunity to show off what you've been able to do

- Other opportunities:

  - Pair programming with other Djangonauts

  - If you haven't started a blog, this might be a good time to. Writing up what you've learned can help to make that learning more concrete and can help others in the future (that might include your future self!)

  - Writing the weekly "Updates to Django" section of the Django News Newsletter

  - Connect and network with other Djangonauts

The most important thing, I think, is to have fun during this program. This is a unique experience and it should be one that you look back on fondly because you learned some stuff, met some awesome people, and most of all had fun.

Djangonaut Space - Session 4

Next week starts session 4 of Djangonaut Space, and I've been selected to be the Navigator for Team Venus with an amazing group of people. As has happened before, I go into this with an impossible amount of imposter syndrome lurking over me. While this will be my third time doing this, it still feels all new to me and I'm constantly worried that I'm going to "do it wrong".

I have the start of a plan to help with my navigator duties, and I need to get that all written down so that I don't forget what needs to be done and when it needs to be done by! I'm hoping that I'll be able to pick up a ticket and work alongside my Djangonauts as I have done before, but the seasons of life can, and do, have a way of changing quickly.

Perhaps I can just try and focus on getting my one current In Progress ticket wrapped up before diving into a new one 🤔

Anyway, I'm super excited about the prospect of Session 4 and can't wait to "meet" my Djangonauts on our first call next week.

Here's to hoping my imposter syndrome doesn't get the better of me 🚀

Migrating django-tailwind-cli to Django Commons

On Tuesday, October 29, I worked with Oliver Andrich, Daniel Moran and Storm Heg to migrate Oliver's project django-tailwind-cli from Oliver's GitHub account to Django Commons.

This was the 5th library to be migrated over, but the first one that I led. I was a bit nervous. The Django Commons docs are great and super helpful, but the first time you do something, it can be nerve-wracking.

One thing that was super helpful was knowing that Daniel and Storm were there to help me out when any issues came up.

The first setup steps are pretty straightforward and we were able to get through them quickly. Then we ran into an issue that none of us had seen before.

django-tailwind-cli had initially set up GitHub Pages for its docs, but later migrated to Read the Docs. However, GitHub Pages was still enabled in the repo, so when we tried to migrate it over we ran into an error. Apparently you can't remove GitHub Pages using Terraform (the tool that we use to manage the organization).

We spent a few minutes trying to parse the error, make some changes, and try again (and again) and we were able to finally successfully get the migration completed 🎉

A couple of other things came up during the migration. One was a maintainer who was set in the front end, but not in the Terraform file. Also, while I was making changes to the Terraform file locally, I ran into an issue with an update that had been made in the GitHub UI on my branch, which caused a conflict for me locally.

I've had to deal with this kind of thing before, but ... never with an audience! Trying to work through the issue was a bit stressful to say the least 😅

But, with the help of Daniel and Storm I was able to resolve the conflicts and get the code pushed up.

As of this writing we have 6 libraries that are part of the Django Commons organization, and I am really excited for the next time that I get to lead a migration. Who knows, at some point I might actually be able to do one on my own ... although our hope is that this can be automated much more ... so maybe that's what I can work on next.

Working on a project like this has been really great. There are such great opportunities to learn various technologies (Terraform, GitHub Actions, git) and to work with great collaborators.

What I'm hoping to be able to work on this coming weekend is[1]:

- Get a better understanding of Terraform and how to use it with GitHub

- Use Terraform to do something with GitHub Actions

- Try and create a merge conflict and then use the git cli, or Git Tower, or VS Code to resolve the merge conflict

For the third item in particular, I want to be more comfortable fixing those kinds of issues so that if / when they come up again I can resolve them.
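For the merge-conflict practice, a conflict can be manufactured on purpose. A minimal bash sketch using only standard git commands; `make_conflict_repo`, the repo path, the file name, and the branch names are all made up for the exercise.

```bash
#!/bin/bash
# Build a throwaway repo with a deliberate merge conflict to practice resolving.
# Usage: make_conflict_repo /tmp/conflict-practice
make_conflict_repo() {
  local dir="$1" base_branch
  git init -q "$dir" || return 1
  # Local identity and no commit signing, so commits work without a global git config.
  git -C "$dir" config user.name "Practice"
  git -C "$dir" config user.email "practice@example.com"
  git -C "$dir" config commit.gpgsign false

  printf 'The sprint starts on Monday.\n' > "$dir/notes.txt"
  git -C "$dir" add notes.txt
  git -C "$dir" commit -qm "Initial commit"
  # The default branch may be main or master depending on git config.
  base_branch="$(git -C "$dir" symbolic-ref --short HEAD)"

  # Change the same line differently on two branches.
  git -C "$dir" checkout -q -b feature
  printf 'The sprint starts on Tuesday.\n' > "$dir/notes.txt"
  git -C "$dir" commit -qam "Feature branch change"

  git -C "$dir" checkout -q "$base_branch"
  printf 'The sprint starts on Wednesday.\n' > "$dir/notes.txt"
  git -C "$dir" commit -qam "Base branch change"

  # The merge stops with conflict markers in notes.txt, ready to resolve.
  if git -C "$dir" merge feature > /dev/null 2>&1; then
    echo "Unexpected: merge succeeded without a conflict" >&2
    return 1
  fi
  echo "Conflict ready in $dir/notes.txt"
}
```

From there, `git status` shows the conflicted file, which can be resolved with the git CLI, Git Tower, or VS Code, then finished with `git add notes.txt` and `git commit`. Deleting the directory resets the exercise.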

1. Now, will I actually be able to? 🤷🏻

DjangoCon US 2024


I was able to attend DCUS 2024 this year in Durham from September 22 to September 27, and just like in 2023, it was an amazing experience.

I gave another talk (hooray!) and got to hang out with some truly amazing people, many of whom I call my friends.

I was fortunate in that my talk was on Monday morning, so as soon as my talk was done, I could focus on the conference and less on being nervous about my talk!

One thing I took advantage of this year, that I didn't in previous years, was the 'Hallway Track'. I really enjoyed that time on Monday afternoon to decompress with some of the other speakers in the lobby.

One of the talks that I've been able to watch since the conference was Troubleshooting is a Lifestyle 😎, which had this great note: "Asking for help is not a sign of failure - it's a strategy."

I am bummed that I missed a few talks live (Product 101 for Techies and Tech Teams, Passkeys: Your password-free future, and Django: the web framework that changed my life) but I will go back and watch them in the next several days and I'm really looking forward to that.

There is a great playlist of ALL of the talks from this year (and previous years) that I highly recommend you search through and watch!

A few others have written about their experiences (Mario Munoz and Will Vincent) and you should totally read those. Some of the

The Food

DCUS via the culinary experience!

Durham has some of the best food and I would go back again JUST for the food. Some of my highlights were:

- Cheeni

- Thaiangle of Durham

- Queeny's

- Ponysaurus

- Cocoa Cinnamon

- Pizza Torro

- The conference venue food - fried chicken and peach cobbler were my favorite

The Sprints

During the sprints I was able to work on a few tickets for DjangoPackages[1][2] and get some clarification on a Django doc[3] ticket that I've been wanting to work on for a while now.

The after party in Palm Springs

I left Durham very early on Saturday morning to head back home to Southern California. Leaving a great conference like DjangoCon US can be hard as Kojo has written about.

One upside for me was knowing that a few people from the conference were road tripping out to California and they were going to stop and visit! The following week I had a great dinner with Thibaud, Sage, and Storm at Tac/Quila.

Here's a toot on Mastodon with a picture of the 4 of us after dinner

Looking Forward

I just feel so much calmer after the conference, and am super happy.

I'm looking forward to my involvement in the Django Community until the next DjangoCon I'm able to attend[4]. Some things specifically are:

- Working on Django tickets

- Admin work with Django Commons with Tim, Lacey, Daniel, and Storm

- Working on Django Packages with Jeff and Maksudul

- Djangonaut Space (if and when they need a navigator, but just hanging out in the Discord is pretty awesome too!)

I'm so grateful for the friends and community that Django has given to me. I'm really hoping to be able to pay it forward with my involvement over the next year until I have a chance to see all of these amazing people in person again.

Django Commons

First, what are "the commons"? The concept of "the commons" refers to resources that are shared and managed collectively by a community, rather than being owned privately or by the state. This idea has been applied to natural resources like air, water, and grazing land, but it has also expanded to include digital and cultural resources, such as open-source software, knowledge databases, and creative works.

As Organization Administrators of Django Commons, we're focusing on sustainability and stewardship as key aspects.

Asking for help is hard, but it can be done more easily in a safe environment. As we saw with the xz utils backdoor attack, maintainer burnout is real. And while there are several arguments about being part of a 'supply chain', if we, as a community, can offer a place where maintainers can work together for the sustainability and support of their packages, the Django community will be better off!

From the README of the membership repo in Django Commons:

Django Commons is an organization dedicated to supporting the community's efforts to maintain packages. It seeks to improve the maintenance experience for all contributors; reducing the barrier to entry for new contributors and reducing overhead for existing maintainers.

OK, but what does this new organization get me as a maintainer? The (stretch) goal is that we'll be able to provide support to maintainers, whether that's helping to identify best practices for packages (like requiring tests), or normalizing the idea that maintainers can take a step back from their project and know that there will be others to help keep the project going. Being able to accomplish these two goals would be amazing ... but we want to do more!

In the long term we're hoping that we're able to do something to help provide compensation to maintainers, but as I said, that's a long term goal.

The project was spearheaded by Tim Schilling and he was able to get lots of interest from various folks in the Django Community. But I think one of the great aspects of this community project is the transparency that we're striving for. You can see here an example of a discussion, out in the open, as we try to define what we're doing, together. Also, while Tim spearheaded this effort, we're really all working as equals towards a common goal.

What we're building here is a sustainable infrastructure and community. This community will allow packages to have a good home, to allow people to be as active as they want to be, and also allow people to take a step back when they need to.

Too often in tech, and especially in OSS, maintainers / developers will work and work and work because the work they do is generally interesting, and has interesting problems to try and solve.

But this can have a downside that we've all seen ... burnout.

By providing a platform for maintainers to 'park' their projects, along with the necessary infrastructure to keep them active, the goal is to allow maintainers the opportunity to take a break if, or when, they need to. When they're ready to return, they can do so with renewed interest, with new contributors and maintainers who have helped create a more sustainable environment for the open-source project.

The idea for this project is very similar to, but different from, Jazzband. Again, from the README:

Django Commons and Jazzband have similar goals, to support community-maintained projects. There are two main differences. The first is that Django Commons leans into the GitHub paradigm and centers the organization as a whole within GitHub. This is a risk, given there's some vendor lock-in. However, the repositories are still cloned to several people's machines and the organization controls the keys to PyPI, not GitHub. If something were to occur, it's manageable.

The second is that Django Commons is built from the beginning to have more than one administrator. Jazzband has been working for a while to add additional roadies (administrators), but there hasn't been visible progress. Given the importance of several of these projects it's a major risk to the community at large to have a single point of failure in managing the projects. By being designed from the start to spread the responsibility, it becomes easier to allow people to step back and others to step up, making Django more sustainable and the community stronger.

One of the goals for Django Commons is to be very public about what's going on. We actively encourage use of the Discussions feature in GitHub and have several active conversations happening there now[1][2][3].

So far we've been able to migrate ~3~ 4 libraries[4][5][6][7] into Django Commons. Each one has been a great learning experience, not only for the library maintainers, but also for the Django Commons admins.

We're working to automate as much of the work as possible. Daniel Moran has done an amazing job of writing Terraform scripts to help in the automation process.

While there are still several manual steps, with each new library, we discover new opportunities for automation.

This is an exciting project to be a part of. If you're interested in joining us, you have a couple of options:

- Transfer your project into Django Commons

- Join as a member and help contribute to one of the projects that's already in Django Commons

I'm looking forward to seeing you be part of this amazing community!

DjangoCon US 2023

My Experience at DjangoCon US 2023

A few days ago I returned from DjangoCon US 2023 and wow, what an amazing time. The only regret I have is that I didn't take very many pictures. This is something I will need to work on for next year.

On Monday, October 16th, I gave a talk, "Contributing to Django or how I learned to stop worrying and just try to fix an ORM Bug". The video will be posted on YouTube in a few weeks. This was the first tech conference I've ever spoken at!!!! I was super nervous leading up to the talk, and even a bit at the start, but once I got going I finally settled in.

Here's me on stage taking a selfie with the crowd behind me

Luckily, my talk was one of the first non-Keynote talks, so I was able to relax and enjoy the conference for the rest of the time.

After the conference talks ended on Wednesday I stuck around for the sprints. This is such a great time to be able to work on open source projects (Django adjacent or not) and just generally hang out with other Djangonauts. I was able to do some work on DjangoPackages with Jeff Triplett, and just generally hang out with some truly amazing people.

The Django community is just so great. I've been to many conferences before, but this one is the first where I feel like I belong.

I am having some of those post-conference blues, but thankfully Kojo Idrissa wrote something about how to help with that. Taking his advice has helped me come down from the conference high.

Although the location of DjangoCon US 2024 hasn't been announced yet, I'm making plans to attend.

I am also setting myself some goals to have completed by the start of DCUS 2024:

- join the fundraising working group

- work on at least 1 code related ticket in Trac

- work on at least 1 doc related ticket in Trac

- have been part of a writing group with fellow Djangonauts and posted at least 1 article per month

I had a great experience speaking, and I think I'd like to do it again, but I'm still working through that.

It's a lot harder to give a talk than I thought it would be! That being said, I do have in my 'To Do' app a task to 'Brainstorm DjangoCon talk ideas' so we'll see if (1) I'm able to come up with anything, and (2) I have a talk accepted for 2024.

Contributing to Django or how I learned to stop worrying and just try to fix an ORM Bug

I went to DjangoCon US a few weeks ago and hung around for the sprints. I was particularly interested in working on open tickets related to the ORM. It so happened that Simon Charette was at DjangoCon and was able to meet with several of us to talk through the inner workings of the ORM.

With Simon helping to guide us, I took a stab at an open ticket and settled on ticket 10070. After I reviewed it on my own, and then with Simon, it looked like it wasn't really a bug anymore, so we agreed that I could mark it as done.

Kind of anticlimactic given what I was hoping to achieve, but a closed ticket is a closed ticket! And so I tweeted out my accomplishment for all the world to see.

A few weeks later, though, someone added a comment saying it actually was still a bug, and the ticket was reopened.

I was disappointed ... but I now had a chance to actually fix a real bug! I started in earnest.

One pattern Simon Willison has suggested for working through learning new things is keeping a public-notes repo on GitHub. He's worked through some great stuff there that you can see here.

Using this as a starting point, I decided to write up what I learned while working on this open ticket.

Over the course of 10 days I had a 38-comment 'conversation with myself', and it was super helpful!

A couple of key takeaways from working on this issue:

- Carlton Gibson said, essentially, that once you start working on a ticket from Trac, you are the world's foremost expert on that ticket ... and he's right!

- ... But you're not working the ticket alone! During the course of my work on the issue I had help from Simon Charette, Mariusz Felisiak, Nick Pope, and Shai Berger.

- The ORM can seem big and scary ... but remember, it's just Python

I think each of these lessons is important for anyone thinking of contributing to Django (or other open source projects).

That being said, the last point is one that I think can't be emphasized enough.

The ORM has a reputation for being this big black box that only 'really smart people' can understand and contribute to. But, it really is just Python.

If you're using Django, you (more likely than not) know a little bit of Python. And if you've written any models, you have a conceptual understanding of what SQL is trying to do, well enough, I would argue, that you can get in there AND make sense of what is happening.

And if you know a little bit of Python, a great way to learn more is to get into a project like Django and try to fix a bug.

My initial solution isn't the one that got merged ... the final version was a collaboration with 4 people, 2 of whom I've never met in real life, and the other 2 I had only just met at DjangoCon US a few weeks before.

While working through this I learned just as much from the feedback on my code as I did from trying to solve the problem with my own code.

All of this is to say, contributing to open source can be hard, and it can be scary, but honestly, I can't think of a better place to start than Django, and within Django there are lots of places to start.

And for those of you feeling a bit adventurous, there are plenty of ORM tickets just waiting for you to try and fix them!

Django and Legacy Databases

I work at a place that is heavily invested in the Microsoft tech stack: Windows Servers, C#.NET, Angular, VB.NET, Windows workstations, Microsoft SQL Server, etc.

When not at work, I really like working with Python and Django. I never really thought I'd be able to combine the two until I discovered the mssql-django package, which was released in alpha on Feb 18, 2021, and as a full-fledged version 1 in late July of that same year.

Ever since then I've been trying to figure out how to incorporate Django into my work life.

I'm going to use this series as an outline of how I'm working through the process of getting Django to be useful at work: the issues I run into, and the solutions I'm (hopefully) able to achieve.

I'm also going to use this as a more in-depth analysis of an accompanying talk I'm hoping to give at DjangoCon 2022 later this year.

I'm going to break this down into a several-part series that will roughly align with the talk I'm hoping to give. The parts will be:

- Introduction/Background

- Overview of the Project

- Wiring up the Project Models

- Database Routers

- Django Admin Customization

- Admin Documentation

- Review & Resources

My intention is to publish one part every week or so. Sometimes the posts will come fast, and other times not. This will mostly be due to how well I'm doing with writing up my findings and/or getting screenshots that will work.

The tool set I'll be using is:

- docker

- docker-compose

- Django

- MS SQL

- SQLite
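To show where the series is heading, here is a minimal sketch of one way to pair SQLite with a legacy SQL Server database in a single Django project. It assumes mssql-django's `"ENGINE": "mssql"` backend. The `legacy` alias, the `legacy_app` app label, the host, the credentials, the driver version, and the `myproject.routers` path are all hypothetical placeholders, not the actual project's configuration. The router methods (`db_for_read`, `db_for_write`, `allow_migrate`) are Django's documented router hooks.

```python
# Sketch only: SQLite for Django's own tables, SQL Server (via mssql-django)
# for the legacy data. Aliases, names, host, and credentials are hypothetical.

DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.sqlite3",
        "NAME": "db.sqlite3",
    },
    "legacy": {
        "ENGINE": "mssql",  # backend provided by the mssql-django package
        "NAME": "LegacyDb",  # hypothetical database name
        "HOST": "sqlserver.example.com",  # hypothetical host
        "USER": "django_user",
        "PASSWORD": "change-me",
        "OPTIONS": {"driver": "ODBC Driver 17 for SQL Server"},
    },
}

# Tell Django where to find the router; "myproject.routers" is a hypothetical path.
DATABASE_ROUTERS = ["myproject.routers.LegacyRouter"]


class LegacyRouter:
    """Route models from the (hypothetical) 'legacy_app' app to the 'legacy' database."""

    legacy_apps = {"legacy_app"}

    def _route(self, model):
        # Returning None means "no opinion", so Django moves on to the next
        # router and finally falls back to 'default'.
        if model._meta.app_label in self.legacy_apps:
            return "legacy"
        return None

    def db_for_read(self, model, **hints):
        return self._route(model)

    def db_for_write(self, model, **hints):
        return self._route(model)

    def allow_migrate(self, db, app_label, model_name=None, **hints):
        # Keep `migrate` away from the legacy schema, and don't create the
        # legacy app's tables in the default database either.
        if db == "legacy" or app_label in self.legacy_apps:
            return False
        return None
```

Refusing migrations for the legacy alias is a cautious default for a database that Django doesn't own. It typically pairs with `managed = False` in each legacy model's `Meta`, so that Django reads and writes the existing tables without trying to create or alter them.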
